From WWII Codes to Hastings Classrooms: The Past, Present, and Future of Information Theory

Origins: Wartime Codebreakers and the Birth of Bits

Information theory was born in an era of urgent necessity. During World War II, the challenge of reliably transmitting messages – whether coordinating Allied troops or decrypting enemy codes – drove scientists to seek a mathematical understanding of “information.” At Bell Labs in the 1940s, a brilliant American engineer named Claude E. Shannon quietly toiled on this problem informationphilosopher.com. Shannon had a foot in both worlds: by day he worked on secret cryptography projects for national defense, and by night he developed a radical new theory of communication informationphilosopher.com. In 1948, Shannon unveiled “A Mathematical Theory of Communication,” the paper that became known as the “Magna Carta” of the Information Age en.wikipedia.org. This landmark work defined what information really is and how to transmit it even over noisy, error-prone channels scientificamerican.com. What had been seen as distinct technologies – telegraph wires, radio signals, telephone calls, television broadcasts – suddenly fit into one unified framework scientificamerican.com.

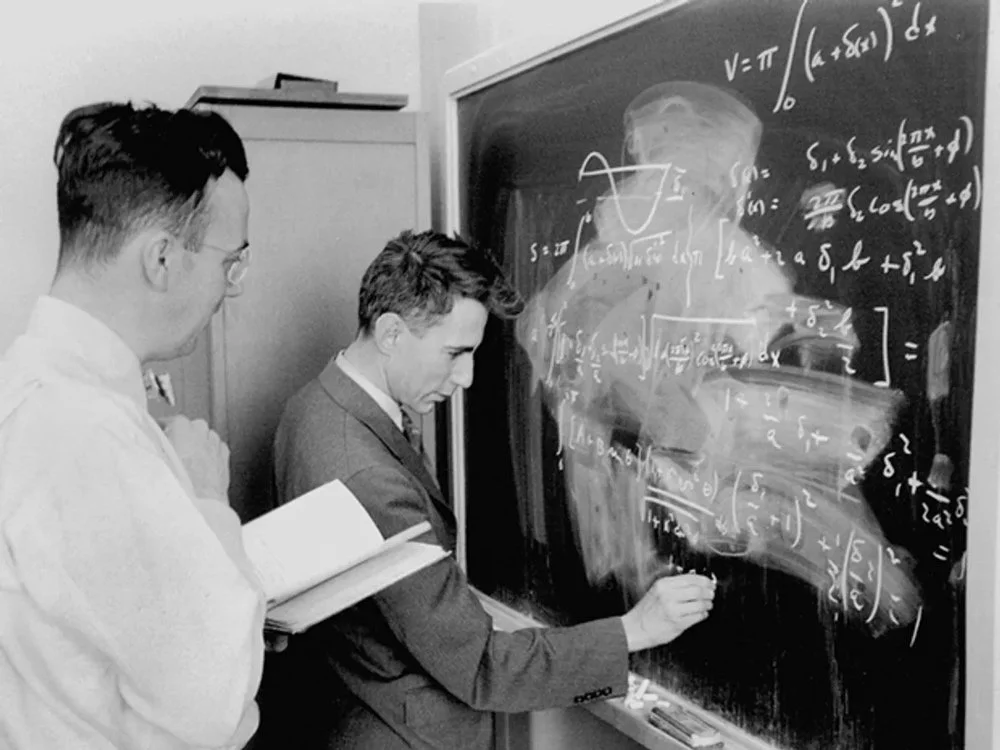

Claude Shannon (right) at Bell Labs during WWII, working on mathematical formulas at a chalkboard. His wartime research on secure communication laid the groundwork for his 1948 paper that defined information theory.

Shannon’s breakthrough was to quantify information as something fundamental – something you could measure in “bits.” A bit is a binary digit, either 0 or 1, and you can think of it like the answer to a yes/no question. For example, imagine a game of 20 Questions: each yes/no answer gives one bit of information, narrowing down the possibilities. Shannon showed that any message – a sentence in English, a photo, a radio signal – can be measured by how many bits it contains. In fact, he borrowed an equation from thermodynamics (entropy) to calculate information content scientificamerican.com. If a message is very surprising or unpredictable, it carries more bits of information; if it’s full of predictable or repeated parts, it carries less. In essence, information was defined as the reduction of uncertainty. This was a revolutionary idea in 1948 scientificamerican.com: it meant engineers could calculate the capacity of a communication channel (like a telephone line or radio frequency) in bits per second and figure out the absolute fastest you can send data without errors scientificamerican.com.
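For readers who like to tinker, the idea can be sketched in a few lines of Python. This is a minimal illustration rather than Shannon's own notation: the function name `entropy_bits` and the example probabilities are assumptions chosen for this article. It computes the entropy formula $H = -\sum_i p_i \log_2 p_i$, the average number of bits carried by each outcome.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy in bits: H = -sum(p * log2(p)) over outcomes with p > 0."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

fair_coin = entropy_bits([0.5, 0.5])      # a perfectly unpredictable yes/no answer
biased_coin = entropy_bits([0.9, 0.1])    # a mostly predictable answer carries less
twenty_questions = 20 * fair_coin         # 20 perfectly balanced yes/no answers
distinguishable_items = 2 ** 20           # possibilities those answers can tell apart

print(fair_coin, round(biased_coin, 3), twenty_questions, distinguishable_items)
```

A fair coin flip comes out at exactly 1 bit, while a coin that lands heads 90% of the time carries only about 0.47 bits per flip, because you can usually guess the result. Twenty perfectly balanced yes/no answers add up to 20 bits, enough to single out one item among 1,048,576 possibilities.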

Crucially, Shannon proved that by adding some smart redundancy – extra “error-correcting” bits – even a noisy channel (imagine a static-filled radio or a scratchy phone line) can deliver perfect, error-free messages up to a certain rate scientificamerican.com. This finding was astonishing in the post-war era. It meant that noise and interference need not defeat communication. Engineers just had to encode the data cleverly. Shannon’s colleagues later joked that he had “bottled the magic of communication.” His work immediately influenced how data was sent and stored: everything from early modems to deep-space probes started to use Shannon’s theory to get more data through noisy environments. Shannon himself had drawn inspiration from the needs of wartime – he even met Alan Turing, the British codebreaker famous from Bletchley Park, when Turing visited Bell Labs during the war to collaborate on secure speech encryption informationphilosopher.com. In a sense, the war’s code wizards and radio pioneers lit the fuse, and Shannon’s theory was the bang that launched the Information Age.
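What "up to a certain rate" means can be illustrated with a standard textbook model, the binary symmetric channel, in which every transmitted bit is flipped with some fixed probability. That choice of model is an assumption made here for illustration (the article does not name one), and the function names are hypothetical. For this channel the capacity works out to $C = 1 - H(p)$, where $H(p)$ is the entropy of the flip probability $p$.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a yes/no event that happens with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(flip_probability):
    """Capacity, in information bits per transmitted bit, of a binary symmetric channel."""
    return 1.0 - binary_entropy(flip_probability)

for p in (0.0, 0.01, 0.11, 0.5):
    print(f"flip probability {p:.2f} -> capacity {bsc_capacity(p):.3f} bits per use")
```

With a 1% flip rate the channel can still carry about 0.92 bits of real information per transmitted bit. At a 50% flip rate the output is indistinguishable from coin tosses, and the capacity drops to zero.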

Information Theory Today: Powering the Digital World

Fast-forward to today, and Shannon’s once-abstract theory now underpins our daily lives in Hastings and around the globe. We live in a world swimming in bits – and information theory is the unseen engine keeping it all running smoothly. Whenever you stream a video, upload a photo, or text a friend, you’re relying on principles that trace back to that 1940s math. Modern data compression algorithms build on Shannon’s idea that you can remove redundancy in data and encode the “surprise” parts more efficiently. If you’ve ever zipped a file to email it, you’ve witnessed information theory in action – the zip program finds patterns and repetition it can pack down, squeezing out the unnecessary bits like air from a bag, and the original can be restored exactly. This is possible because Shannon gave us the theoretical limit of lossless compression: in essence, the shortest possible encoding of the data from which every bit can still be recovered. Formats like JPEG for images or MP3 for music go a step further and also discard details that people are unlikely to notice, which is why they shrink files so dramatically.
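The zip analogy is easy to try at home. The sketch below uses Python's built-in `zlib` module, which implements the same family of compression used in zip files; the sample data and variable names are assumptions made up for this illustration.

```python
import os
import zlib

repetitive = b"Hastings " * 1000    # highly predictable: the same 9 bytes, 1000 times
random_like = os.urandom(9000)      # unpredictable bytes of the same total length

packed_repetitive = zlib.compress(repetitive, 9)   # 9 = strongest compression level
packed_random = zlib.compress(random_like, 9)

print(len(repetitive), "->", len(packed_repetitive))
print(len(random_like), "->", len(packed_random))

# Lossless: decompressing gives back exactly the original bytes.
restored = zlib.decompress(packed_repetitive)
print(restored == repetitive)
```

The repetitive text collapses to a tiny fraction of its size, while the random bytes barely shrink at all (they usually come out slightly larger). Data with no pattern has no redundancy to squeeze out, which is the entropy limit at work.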

Error correction is another everyday miracle from information theory. Our Wi-Fi and mobile phones use error-correcting codes so robust that even if a signal is distorted (say, by a storm or a wall in your house), your device can reconstruct the original message. It’s as if every text or email you send carries a whisper of extra secret instructions that allow the receiver to check and fix errors. Engineers often explain it with a simple analogy: imagine sending a message where each critical word is repeated three times – if one copy gets smudged, the other copies can help fill the gap. Real error-correcting codes are more sophisticated, but the idea is the same. Shannon’s theory tells us exactly how many extra bits are needed for a desired level of reliability on a given channel scientificamerican.com. The result is that modern communications – from DVDs to deep-space satellite links – achieve error-free transmission rates once thought impossible. It’s no exaggeration to say that without information theory, the internet as we know it would not exist. In fact, Shannon’s work has been explicitly credited as a foundation for inventions like the compact disc, the Internet, and even mobile phone networks en.wikipedia.org.
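The "repeat it three times" analogy can be turned into a small working experiment. This is a sketch of a simple repetition code with majority voting, not the codes that Wi-Fi or phones actually use; the function names, the 5% error rate, and the message length are all assumptions chosen for illustration.

```python
import random

def encode(bits, copies=3):
    """Repeat every bit `copies` times (a simple repetition code)."""
    return [b for b in bits for _ in range(copies)]

def decode(received, copies=3):
    """Recover each bit by majority vote over its group of `copies` received bits."""
    groups = (received[i:i + copies] for i in range(0, len(received), copies))
    return [1 if sum(group) > copies // 2 else 0 for group in groups]

def noisy_channel(bits, flip_probability, rng):
    """Flip each bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]

rng = random.Random(0)    # fixed seed so repeated runs give the same result
message = [rng.randint(0, 1) for _ in range(10_000)]

raw = noisy_channel(message, 0.05, rng)
protected = decode(noisy_channel(encode(message), 0.05, rng))

raw_errors = sum(a != b for a, b in zip(message, raw))
protected_errors = sum(a != b for a, b in zip(message, protected))
print("errors without coding:", raw_errors)
print("errors with 3x repetition:", protected_errors)
```

The protected message arrives with far fewer errors, but at a steep price: three bits are sent for every bit of content. The codes used in real systems get much closer to Shannon's limit, achieving similar or better reliability with far less overhead.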

But information theory isn’t just about telecommunications and computers. It has become a lingua franca across sciences – including the science of intelligence, both artificial and natural. In the field of artificial intelligence (AI) and machine learning, concepts from information theory play a key role. For example, when training an AI model, researchers use a quantity called “cross-entropy” (a term rooted in Shannon’s entropy) to measure how well the model is predicting data. If the model’s predictions carry more surprise (more information) than expected, the cross-entropy is high, and training algorithms tweak the model to reduce it. Essentially, the AI is learning to compress the patterns in data – much like our zip file analogy, it’s distilling a large dataset into a smaller model that still captures the important structure. This is why some AI researchers say that intelligence could be viewed as a form of compression: a brain or a computer finds shortcuts to represent the world without storing every detail. When a language model (like the AI behind ChatGPT) learns from billions of sentences, it’s extracting the informational essence of language. Shannon’s legacy is hiding in plain sight every time your smartphone’s autocorrect learns a new word or your streaming service recommends a show you might like.
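Here is a minimal sketch of the cross-entropy idea, assuming a toy model that guesses the next word from just three candidates. The probabilities are invented for illustration, and the helper name `cross_entropy_bits` is hypothetical; real training code averages this quantity over many examples and usually measures it in natural-log units rather than bits.

```python
import math

def cross_entropy_bits(predicted, actual_index):
    """Surprise, in bits, of the outcome that actually occurred, given the model's prediction."""
    return -math.log2(predicted[actual_index])

# Hypothetical predictions over three candidate words; the true word is at index 0.
confident_model = [0.8, 0.1, 0.1]
unsure_model = [1 / 3, 1 / 3, 1 / 3]
wrong_model = [0.05, 0.9, 0.05]

for name, prediction in [("confident", confident_model),
                         ("unsure", unsure_model),
                         ("wrong", wrong_model)]:
    print(name, round(cross_entropy_bits(prediction, 0), 3))
```

The confident, correct model is barely surprised (about 0.32 bits), the unsure model is moderately surprised (about 1.58 bits), and the confidently wrong model is very surprised (about 4.32 bits). Training nudges the model toward predictions that lower this number.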

Perhaps even more striking is how information theory has illuminated neuroscience and the human brain. Not long after Shannon’s papers, scientists began asking: if we have a theory for messages sent through wires, what about messages sent through nerves? After all, our senses – eyes, ears, skin, etc. – are constantly sending a torrent of electrical signals to the brain. It turns out the nervous system can be viewed as an information channel britannica.com. Our sensory organs encode sights and sounds into neural pulses (bits, in a way) that travel through noisy biological circuits. Researchers have tried to measure how many bits per second the human brain processes, especially during conscious activities like reading or listening to music. The results were humbling: even during intense mental tasks, we seem to process under 50 bits per second consciously britannica.com. (For comparison, a basic home internet connection might be 50 million bits per second!) Amazingly, our eyes alone send about 10 million bits per second of raw data to the brain britannica.com – but the brain filters and compresses this torrent down to the tiny trickle that we actually experience consciously britannica.com. It’s as if the brain is an extreme data compressor, throwing away heaps of details and extracting just the key information needed for us to survive and make decisions. This insight has led to theories like “predictive coding,” which suggest the brain is constantly guessing what it will see or hear next and only the unexpected information really registers (in other words, the brain cares about the bits that surprise it).

Neuroscientists in Minnesota and around the world are using Shannon’s formulas to estimate the information content of neural signals, to optimize brain-computer interfaces, and even to understand disorders. For example, how much information can a single optic nerve carry? How many distinct signals can our memory store? These sound like philosophical questions, but information theory lets scientists frame them quantitatively. Here in Hastings, every biology student learning about neurons as “electrical impulses” is unwittingly touching on information theory basics. The Hastings High School STEM classes and robotics club implicitly deal with these ideas when they discuss how sensors on a robot convert real-world measurements into binary data. Information theory has truly become a bridge between engineering and life sciences: it’s helping us see the brain as an organic information-processing machine, one that follows the same math that governs a fiber-optic cable or a Wi-Fi router.

The Next Frontier: Quantum Information and Brain-Computer Interfaces

As revolutionary as the digital bit has been, we’re now entering an era where information theory is encountering new frontiers that Shannon could only dream of. One major frontier is quantum information science. If classical bits are like light switches (on/off), quantum bits, or qubits, are like magic coins that can be both heads and tails at once (at least until you look at them). Quantum computers encode information in the strange states of subatomic particles, following the counterintuitive rules of quantum mechanics. This allows them to process information in ways that defy classical limitations. For instance, two qubits can embody four possible combinations at once (00, 01, 10, 11), ten qubits can represent $2^{10} = 1024$ states at once, and so on – an exponential leap. Quantum information theory extends Shannon’s ideas to this realm, asking how much information can be packed into entangled particles and how error correction works when “noise” comes from quantum uncertainties. Researchers have already defined quantum analogues of Shannon’s channel capacity – essentially calculating how many qubits per second can be reliably sent through a fiber or stored in a quantum memory.
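The exponential growth is simple to check with ordinary arithmetic. The sketch below only counts bit patterns; it does not simulate a quantum computer. The function names are hypothetical, and the figure of 16 bytes per stored number is an assumption (two 64-bit floating-point values per amplitude) used to show roughly how much memory a classical machine would need to track every combination.

```python
def basis_states(num_qubits):
    """Number of distinct bit patterns an n-qubit register can be a blend of: 2**n."""
    return 2 ** num_qubits

def labels(num_qubits):
    """List the bit patterns, e.g. ['00', '01', '10', '11'] for two qubits."""
    return [format(i, f"0{num_qubits}b") for i in range(basis_states(num_qubits))]

print(labels(2))
for n in (2, 10, 30, 50):
    # Assumption: 16 bytes per amplitude when tracking the state on a classical computer.
    print(n, "qubits:", basis_states(n), "patterns,", basis_states(n) * 16, "bytes")
```

By 50 qubits the bookkeeping alone would take about 18 million gigabytes, which is why even modest quantum machines are hard to imitate classically. One caveat: measuring the qubits still yields just one bit pattern, so quantum algorithms have to be designed cleverly to make use of all those combinations.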

Why does this matter? Because quantum computing promises to tackle problems that classical computers find intractable. Imagine an AI trying to simulate complex chemistry for a new drug: a classical computer might take centuries, whereas a quantum computer (leveraging qubits that can explore many possibilities simultaneously) could in principle crack it much faster dornsife.usc.edu. Experts predict that in the next decade, quantum information tech could help discover new materials and medicines, advance our understanding of diseases, and turbo-charge AI by generating huge amounts of training data or solving optimization problems that stump today’s machines dornsife.usc.edu. It’s like having a supercharged brain at our disposal, one that thinks in parallel universes of possibilities. Quantum information also cuts both ways for security. On one hand, quantum communication can be ultra-secure – scientists are already using quantum encryption where any eavesdropper’s attempt to intercept a message will disturb the quantum states and be noticed. On the other hand, sufficiently powerful quantum computers could break today’s standard internet encryption with ease dornsife.usc.edu. This has spurred a global race for post-quantum cryptography, new coding schemes designed to resist even a quantum codebreaker dornsife.usc.edu. In short, the future of secure banking, national security, and even daily privacy may rest on advances in quantum information theory. Minnesota’s own universities are active in quantum research; one can imagine a day when the Twin Cities become a hub for quantum tech startups, and Hastings students trained in information theory might find themselves working on the next quantum algorithm or device.

Another thrilling frontier at the intersection of information theory, computing, and neuroscience is the rise of brain-computer interfaces (BCIs). These are systems that create direct communication pathways between a human brain and an external device – effectively translating neural information (the firing of neurons) into digital signals and vice versa. It sounds like science fiction, but it’s quickly becoming science fact. In 2024, for example, researchers at UC Davis announced a brain-computer interface that allowed a man with ALS (a condition that causes paralysis) to communicate just by thinking. The BCI translated his brain’s electrical intentions into spoken sentences on a computer with up to 97% accuracy health.ucdavis.edu. Imagine the joy of hearing one’s “voice” again after disease had stolen the ability to speak – and imagine the information theory challenge here: decoding the electrical chatter of thousands of neurons and identifying the information corresponding to intended words. That system relied on advanced algorithms distinguishing signal from noise – essentially Shannon’s problem, but inside the brain.

Meanwhile, companies like Neuralink (founded by Elon Musk) have grabbed headlines by implanting chip electrodes in brains; in fact, the first human trial of a high-profile neural implant was approved and got under way in 2023, bringing BCIs out of the lab and into real-world testing technologynetworks.com. These devices aim to do everything from restoring movement to paralyzed patients, to giving voice to those who can’t speak, to even one day augmenting human cognition (Musk talks about “merging” with AI to keep up with intelligent machines). Here in Minnesota, the Mayo Clinic and University of Minnesota have neuroscience programs that experiment with BCI technologies, and it’s easy to see how information theory underlies all of it. A brain-computer interface is essentially a communications channel between mind and machine. The brain’s signals are full of noise and complexity; decoding them is like trying to understand a cacophony of simultaneous radio stations. Information theory guides engineers on how much data from the brain is needed to control a cursor on a screen, or how to compress neural data for wireless transmission from an implant. It even helps set theoretical limits: for example, how many bits per second can we extract from the motor cortex of a human brain to control a prosthetic arm? Answering that helps design better BCIs.

Beyond medicine, BCIs raise big societal and ethical questions – issues that communities like Hastings will surely discuss in the coming years. If someday healthy people can get brain implants to enhance memory or immerse into virtual reality by thought, what does that mean for education and equity? These might sound far-off, but consider that just a generation ago, the internet itself seemed like a wild idea. The pace of tech evolution is rapid. Our community might see, within a decade or two, residents who control devices via thought or who benefit from neural prosthetics. It’s a development that sits squarely at the crossroads of technology and humanity – and once again, it’s built on the foundation Shannon laid. Just as his theory once helped send radio messages securely to troops, now it helps send messages from a human mind to a computer cursor, or from a camera straight into a blind patient’s brain (another BCI application being trialed). The frontier is as inspiring as it is challenging. And it reminds us that information is a very general concept – whether it’s bits in a fiber cable, qubits in a quantum computer, or neural spikes in our brain, the same core principles apply.

Information Theory, Consciousness, and the Simulated Universe

Information theory doesn’t stop at engineering and biology – it’s begun to influence the deepest questions about reality itself. In recent years, scientists and philosophers have been intrigued by the connections between information and consciousness. One prominent theory, called Integrated Information Theory (IIT), even proposes that consciousness just is a certain kind of information structure psychologytoday.com. According to IIT, if a system (like a brain, or potentially even a sophisticated computer) has a high degree of integrated information – meaning all its parts are highly interactive and interdependent in processing information – then that system generates consciousness. In plain language, the theory suggests that the feeling of being conscious arises when information is woven together tightly. A simple light sensor or a computer might have only a tiny bit of this integration, so it’s not conscious. A human brain, which integrates billions of signals, has a very high integration, so it has a rich conscious experience. This idea is controversial (and still being tested and debated), but it’s fascinating because it implies that consciousness could be measured in principle – perhaps in units of bits! It also provocatively hints that consciousness might not be an all-or-nothing property; different creatures or systems could have different degrees of it depending on their information architecture psychologytoday.com. Some have even interpreted IIT as a form of panpsychism – the philosophical view that consciousness is a fundamental feature of the universe, present even in simple systems in tiny amounts psychologytoday.com. Whether or not IIT is correct, it exemplifies how far Shannon’s legacy has reached: from enabling better telephone calls all the way to trying to explain why we have first-person experiences.

Then there’s the even wilder idea that reality itself might be made of information – or even be a kind of grand simulation. This notion has been popularized by philosophers like Nick Bostrom and tech visionaries, and it got a pop-culture boost from The Matrix films. The basic argument (in Bostrom’s Simulation Hypothesis) is a bit of a head-spinner: if it’s possible for an advanced civilization to create a perfectly realistic simulation of a universe, and if such civilizations would be interested in doing so, then there might be many more simulated worlds than real ones – so statistically, we could be inside a simulation right now. Some scientists have taken this seriously enough to analyze it in probabilistic terms. A Columbia University astronomer, David Kipping, recently used Bayesian statistics (a way to update probabilities with evidence) to examine Bostrom’s argument. His conclusion: given the assumptions, the odds that we are living in a base physical reality versus a simulation are roughly a coin flip – about 50–50 scientificamerican.com. In other words, there is no strong evidence either way, so it might be just as likely we’re in a giant cosmic computer program as not. Moreover, Kipping found that if humanity ever does create a simulation containing conscious beings, then the odds would dramatically tip – it would become almost a certainty that we ourselves are in a simulation created by someone else scientificamerican.com. (Why? Because if we prove it’s possible to make such simulations, then by the same logic, there could be layers upon layers of simulated worlds.) It’s a staggering thought: our quest to process information and create AI might eventually force us to question the nature of our own reality.

Before dismissing this as pure science fiction, it’s worth noting that the idea of reality as an illusion or construct isn’t new. Philosophers from both West and East have wondered about this for millennia – from Plato’s Allegory of the Cave (where people mistake shadows on a wall for reality) to Zhuang Zhou’s Butterfly Dream (wondering if he was a man dreaming of a butterfly or a butterfly dreaming of a man) scientificamerican.com. In a sense, the Simulation Hypothesis is a high-tech echo of these age-old ideas. The difference now is that we can frame hypotheses about reality in terms of information processing. If our universe is a simulation, then at some fundamental level it’s composed of bits – like a giant video game running on cosmic hardware. Even some physicists have leaned toward an “it from bit” viewpoint, a phrase coined by John Archibald Wheeler, meaning that physical things (“it”) ultimately arise from information bits. They point to puzzling features of physics: for example, the fact that quantum particles seem to “decide” their state only when observed might hint that reality has a kind of informational underpinning, as if outcomes are computed on the fly. While no definitive evidence of a simulation exists (and indeed, other scientists strongly argue that the universe is not a simulation), the conversation itself is valuable. It brings together computer science, physics, and philosophy in a quest to understand if there’s a deeper level of truth beneath what we perceive. And crucially, it’s another realm where information theory finds relevance.

If one day we were to seriously test the simulation theory, we’d be looking for informational artifacts – maybe subtle glitches or limits in the fabric of physics that reveal an underlying “code.” Already, some have mused about things like the maximum amount of information that can be stored in a given region of space (known as the Bekenstein bound, a limit that black holes reach) or the pixelation of space at Planck-scale distances as possible clues. These ideas are speculative, yes, but they capture the imagination. They inspire us to ask, what really is information? Is it just an abstract tool, or is it the stuff reality is made of?

For a local community like Hastings, discussions about consciousness and simulated universes might seem far removed from daily concerns. But these big ideas have a way of trickling down and shaping culture, education, and our sense of possibility. High schoolers pondering the nature of the mind in philosophy club, or a book club at the public library reading a sci-fi novel about living in a simulation – these are ways global ideas become local conversations. And engaging with such questions can be inspiring and enriching; they remind us that even a small riverside city like ours is connected to the grandest intellectual adventures humanity is undertaking.

Bringing It Home to Hastings: Why Information Theory Matters Locally

You might be wondering, “This is fascinating—but what does Hastings, Minnesota have to do with information theory?” The answer is: everything. Bits and bytes quietly shape our daily routines. Hastings High students learn on school-issued iPads, families binge-watch shows over fiber, and small businesses crunch data to track inventory or target social-media ads. All that happens on the invisible rails Claude Shannon laid down. When the city expands broadband or the library boosts its public Wi-Fi, we’re really widening an information super-highway. Even the smart sensors on the Highway 61 bridge rely on error-free data to monitor structural health—proof that high-quality information infrastructure translates directly into quality of life, from remote work and tele-medicine to rapid emergency response.

Local STEM Sparks

Our community is already nurturing the next generation of “bit wranglers.”

Hastings Middle School tech-ed classes roll out Ozobots and Finch robots, letting sixth- to eighth-graders race tiny machines down taped tracks while learning the logic of code.

Over at St. Elizabeth Ann Seton School, a FIRST LEGO League team designs autonomous LEGO bots for statewide challenges—an accessible on-ramp to engineering for 5th- through 8th-graders.

Community Education fills summer days with “Robot Olympics” camps, where elementary students program wheeled robots to navigate obstacle courses in the HMS South Gym.

Together these programs teach feedback loops, sensor fusion, and efficient algorithms—the very concepts that power search engines, Wi-Fi, and AI. Hastings doesn’t yet field a varsity-level FIRST Robotics Competition team, but regional mentors say it could launch within a season. Imagine the buzz when a purple-and-gold robot emblazoned HASTINGS RAIDERS rolls into a Twin Cities arena, its codebase built on Shannon’s principles.

Knowledge Hubs & Public Curiosity

The Pleasant Hill Library is becoming our civic nerve center for digital literacy. It already lends Wi-Fi hotspots; next up could be coding clubs for kids, “Intro to AI” talks for adults, or a “Data Privacy 101” series on staying safe online. Picture a March 14 “π Day” event where residents encrypt secret messages on Raspberry Pi computers, or a “World Quantum Day” guest lecture from a University of Minnesota researcher demystifying qubits. These gatherings turn big ideas into shared civic experiences.

Economic Upside & Rural-Urban Synergy

Hastings may be small, but we sit inside a growing Twin Cities tech corridor. Strengthening local talent in information science prepares residents for high-wage jobs—whether in cybersecurity firms across the river or remote roles for Fortune 500 companies. Meanwhile, surrounding farms adopt precision-agriculture drones and GPS-guided tractors, both fueled by data analytics. Embracing information tech lets Hastings boost yields in its fields and bandwidth for its remote workers.

Igniting a Culture of Inquiry

Hosting public forums on questions like “Can we measure consciousness?” or “Will quantum computers rewrite medicine?” signals to young people that Hastings values their curiosity. A 15-year-old who attends an encryption workshop today could pursue a cybersecurity career tomorrow; a YMCA hackathon might spawn the city’s next app start-up.

A River Town of Digital Currents

Our town once thrived on the physical flow of riverboat news and goods; today we stand on the banks of a digital river that carries information at light speed. By understanding—and championing—information theory, we honor that legacy of connection in a modern form.

Information theory isn’t just abstract math. It’s the story of humanity mastering the intangible: protecting wartime secrets, birthing the internet, decoding the brain, and perhaps even hinting at the nature of reality. That story stretches from Claude Shannon’s chalk-dusted lab to a Hastings middle-schooler debugging robot code after class. By embracing it as a community, we position Hastings not just to adapt to the future but to help invent it. Somewhere in town, the next Shannon may already be soldering a circuit and dreaming in bits; let’s give them every tool, and every byte, they need.

Sources:

Scientific American – Claude Shannon’s 1948 paper unified how we send information across telegraph, telephone, radio and TV scientificamerican.com and defined information in terms of bits using an entropy formula scientificamerican.com.

Information Philosopher – During WWII, Shannon worked on cryptography at Bell Labs and even collaborated with Alan Turing informationphilosopher.com, shaping his ideas for his later groundbreaking paper.

Wikipedia – Shannon’s theory laid the foundations for CDs, the Internet, mobile phones, and even influenced physics (black hole information) en.wikipedia.org. He’s often called the “father of information theory.”

Britannica – Researchers found the human conscious mind processes only ~50 bits/sec even though our senses send 11 million bits/sec to the brain, implying massive information compression in our perception britannica.com.

UC Davis Health – In 2024, a brain-computer interface let a man with ALS “speak” via a computer by translating his brain signals to speech with 97% accuracy, a huge leap for neurotechnology health.ucdavis.edu.

USC Dornsife – Quantum computers use qubits (0/1 at once) and could revolutionize drug discovery, AI, and materials by solving problems much faster than classical computers dornsife.usc.edu. They also pose risks to current encryption, spurring new security research dornsife.usc.edu.

Psychology Today – Integrated Information Theory posits that consciousness arises from integrated bits of information; more interconnected information = more consciousness, a bold and debated idea psychologytoday.com.

Scientific American – Some experts argue there’s roughly a 50–50 chance our reality is a computer simulation. If we ever create simulated conscious beings, it would strongly suggest we ourselves are simulated scientificamerican.com.